Belfry.io - Dirty talk, how can I fight thee?

Dirty words. We all know them. They are inappropriate. They are coarse. They are offensive. We were taught not to use them, especially when speaking to people we don't know, but we just can't help it. I'm not sure what makes them so irresistible. Maybe saying them helps channel that outburst of anger you might experience when people don't use indicators, when the wi-fi is slow, or when you step in dog poop.

In this blog post, I won't get into the psychology of swearing but I will concentrate on the issues that one should address before building a system which automatically identifies and categorizes vulgar language in a text.

1. What makes a word dirty?

Before we start looking at actual swearwords, let's take a step back and try to define what a vulgar word is.

According to the Oxford Dictionary (look it up here), vulgar language lacks sophistication or good taste, and often makes reference to sex or bodily functions.

The dictionary definition is quite accurate (well done OD) but somewhat vague. Do expressions like ain't or should of lack sophistication? Many would say yes. And what about words like digest or respiration? They describe bodily functions for sure, but no one in their right mind would advise against using them. And even if we require both criteria to be met, it is still difficult to decide whether hell, effing, bloody, or holy cow are vulgar or not.

It's so hard to come up with a list of dirty words we can all agree on because of the following problems:

- TIME

Language changes over time. New words emerge and older words fall out of fashion, which means any list has to be updated from time to time. For the interested, here is a list of a few old-fashioned insults, some of which are real gems.

- SPACE

There are regional differences in how people use a language, and regional variability characterizes dirty words too. This awesome blog post shows with maps how people in the US are united when it comes to swearing.

- MULTIPLE MEANINGS

Many words have unrelated meanings. Cock can be a bird, an ass can be a donkey, Dick can be a male given name, and even tit can refer to a songbird. On the other hand, the most innocent words like anaconda, pearl necklace, or cream can have dirty connotations.

- DIFFERENT SENSITIVITY

We all have different tolerances to swearing, which can be unique to us. Some might use fuck instead of full stops, while for others the strongest dirty word they can think of is crap.

The sad truth is that almost anything can be vulgar or offensive in the right context. So, how can you teach a computer to detect vulgarity? Well, I didn't say it would be easy if you want to do it right, but it's far from impossible.

2. Where to start?

As we have seen in the previous section, the biggest problem with vulgar expressions (and with language in general) is variability. There are just too many factors that determine language use, so anyone who tries to create a computer application concerning human language has to decide how general or specific the model is going to be.

In one extreme case, the model may only detect the most obvious examples, which for vulgarisms would mean the most common swear words that everybody considers filthy. In a classic stand-up comedy show, George Carlin gave an excellent example of such an approach. His list, which became famous as "The Seven Words You Can Never Say on Television", contains only those words that are vulgar 100% of the time (according to Mr. Carlin). Although the list dates back to the 70s, Mr. Carlin's selection of shit, piss, fuck, cunt, cocksucker, motherfucker, and tits (plus fart) may still hold. (Watch the show here.)

However, if the task is to decide whether a given text is vulgar or not, detecting only the filthiest and most obviously vulgar words will not result in a very helpful system: it will miss a lot of dirty expressions and leave a lot of work for the human using it. The alternative approach would be to build systems that are fine-tuned to the language usage and preferences of individual users. Although "personal assistant"-type systems might be the ultimate goal of the field, collecting enough data to create such a system is expensive and extremely time-consuming.

When someone is about to start building a system that automatically detects vulgar text, it might be best to choose a road somewhere in the middle. It is worth starting out with a general model, which tries to grab the average (i.e. what most people would agree on) in the baseline model. In the long run, however, the system should be customizable, so it is imperative to design it as a flexible framework, which is able to incorporate data generated by the users and learn from its mistakes.

3. How to grab the average? - Creating a baseline model

When embarking on a new project, it is always a good tactic to start with reviewing what other people have done in the same area. In the case of vulgarisms, the chief resources are word lists. There are plenty of collections of dirty expressions. I'll review some of the most comprehensive and well-known resources and point out their strengths and weaknesses.

3.1 Dirty word lists on the Internet

Noswearing.com

Probably the most widely used swearword dictionary for computer applications is on noswearing.com, which even has an API. There are around 350 words on the list, most of which refer to sexual acts, bodily functions, and body parts. But there is also a surprisingly large number of derogatory expressions for different ethnicities, and words that question the intelligence of others. The greatest advantage of this dictionary is that all of the words are checked, and it doesn't only list dirty words but gives their meaning too, making it possible to group them by topic. Fun fact: the most popular meaning in the dictionary is idiot, which can be communicated with 75 different insults, followed by almost 70 words referring to homosexuals. The list, however, doesn't give any information about how common an expression is or how insulting it might be.

Wikipedia

There is hardly a topic that Wikipedia hasn't contributed to and vulgar words are no exception either. The category, English vulgarities, is a metaclass of Wiktionary, which automatically lists all the words that have a dirty meaning or might have a vulgar connotation. The list is immense. Last time I checked there were just over 1,500 words that got the vulgar tag.

Unfortunately, the biggest disadvantage of Wikipedia is also its biggest advantage: it is a community project. Anybody can contribute to Wiki, which means that a lot of different people are able to share their opinion, but it is very difficult to check the validity of the content. Moreover, the automatically generated Wiki Vulgarity List doesn't give any additional information about the words on its own. So if you would like to figure out whether a word is primarily vulgar or only has a marginally vulgar connotation in the 17th sense of the word in Southern New Zealand, you have to do a lot of cleaning and fact-checking, or consult other sources.

All in all, Wikipedia is a great resource but it has to be used with caution.

The Online Slang Dictionary

The Online Slang Dictionary is a proprietary initiative, which is a great mixture of traditional dictionaries and community projects. It doesn't only give the meaning of a word, it also lets readers vote on how common a slang expression is and how vulgar it is. Unfortunately, content can appear on the website without proper monitoring, so some of the entries are so idiosyncratic that probably the only person who knows the word's meaning is the one who submitted it. However, if enough people vote on a word, it is easy to spot any discrepancies. For example, the 6th most vulgar expression on the top 100 vulgar words list of The Online Slang Dictionary is rusty trombone, which is probably not that well-known given that 1021 out of 1326 voters said they had never heard this expression (at least in its vulgar sense) before.

All the lists I reviewed in this section are great resources, but they all have clear limitations too. In the next section, I'll experiment by creating a blend of the different lists and use it as a baseline model in order to get the most out of them and to overcome their weaknesses.

3.2 Creating an improved word list

As I would like to grab the average and not the unique or the idiosyncratic, and I would definitely like to avoid heavily relying on my own personal linguistic judgment, I decided to cross-reference the lists described in the previous section and keep only those words that appear on at least two of them.
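Here is a minimal sketch of that cross-referencing step. It assumes each resource has already been exported to a plain Python list of strings; the function name `cross_reference` and the three toy lists are made up for illustration and are not the real contents of the resources.

```python
from collections import Counter


def cross_reference(word_lists, min_lists=2):
    """Return the words that occur on at least `min_lists` of the given lists."""
    counts = Counter()
    for words in word_lists:
        # Normalize case and whitespace, and count each word once per list.
        counts.update({w.strip().lower() for w in words})
    return sorted(w for w, n in counts.items() if n >= min_lists)


# Hypothetical toy excerpts, NOT the real contents of the three resources.
noswearing = ["crap", "Jackass", "douche"]
wiktionary = ["crap", "jackass", "bollocks"]
slang_dictionary = ["bollocks", "crap", "numpty"]

shared = cross_reference([noswearing, wiktionary, slang_dictionary])
print(shared)
```

Converting each list to a set first means a word repeated within one resource still counts only once, so the threshold really measures agreement between sources.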

I also wanted to take into consideration the degree of vulgarity associated with the expressions (is that expression extremely vulgar or just a bit?) and their usage measures (how rare or well-known is that word?). Since I'm not a native English speaker, I decided to use the ratings collected on The Online Slang Dictionary for that purpose.

In the end, I came up with a list of 583 vulgar words, which had both vulgarity indexes and usage ratings. Despite all the effort, there were still some mistakes in the resulting word list. Common words like anaconda or cheese, which can have vulgar connotations in the right context but whose primary meanings are quite innocent, were also selected. So I decided to remove those ~100 words that only had a secondary vulgar meaning.

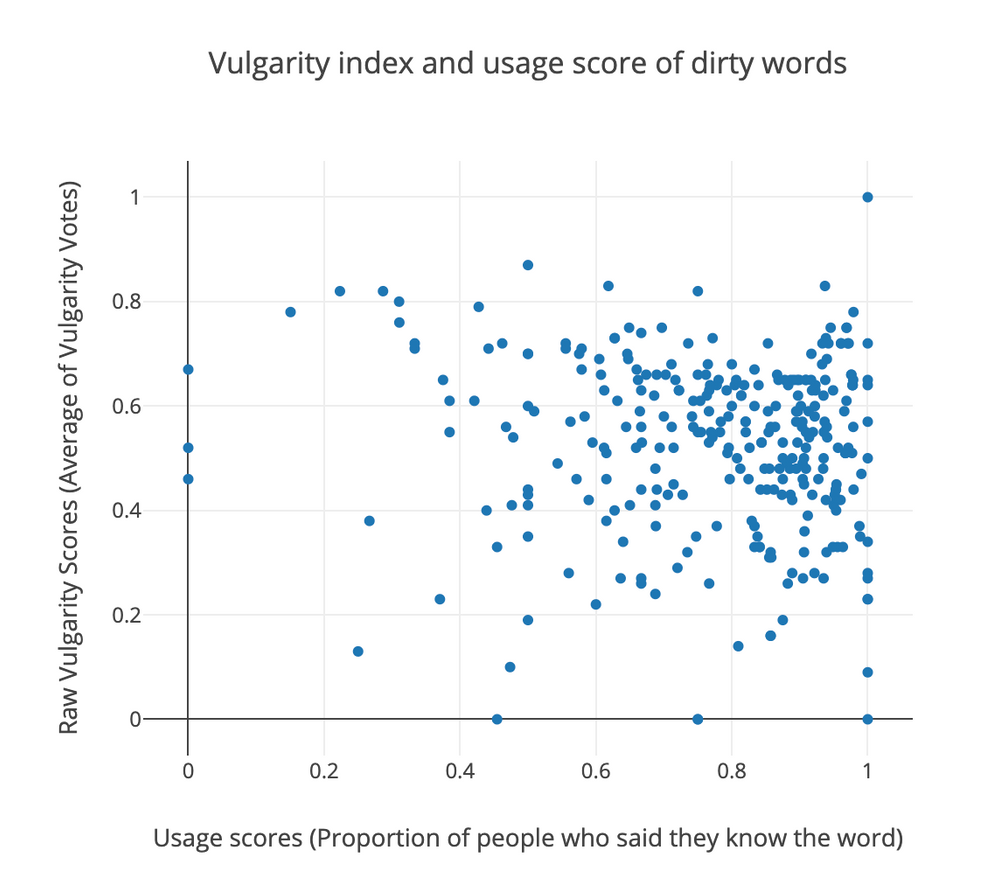

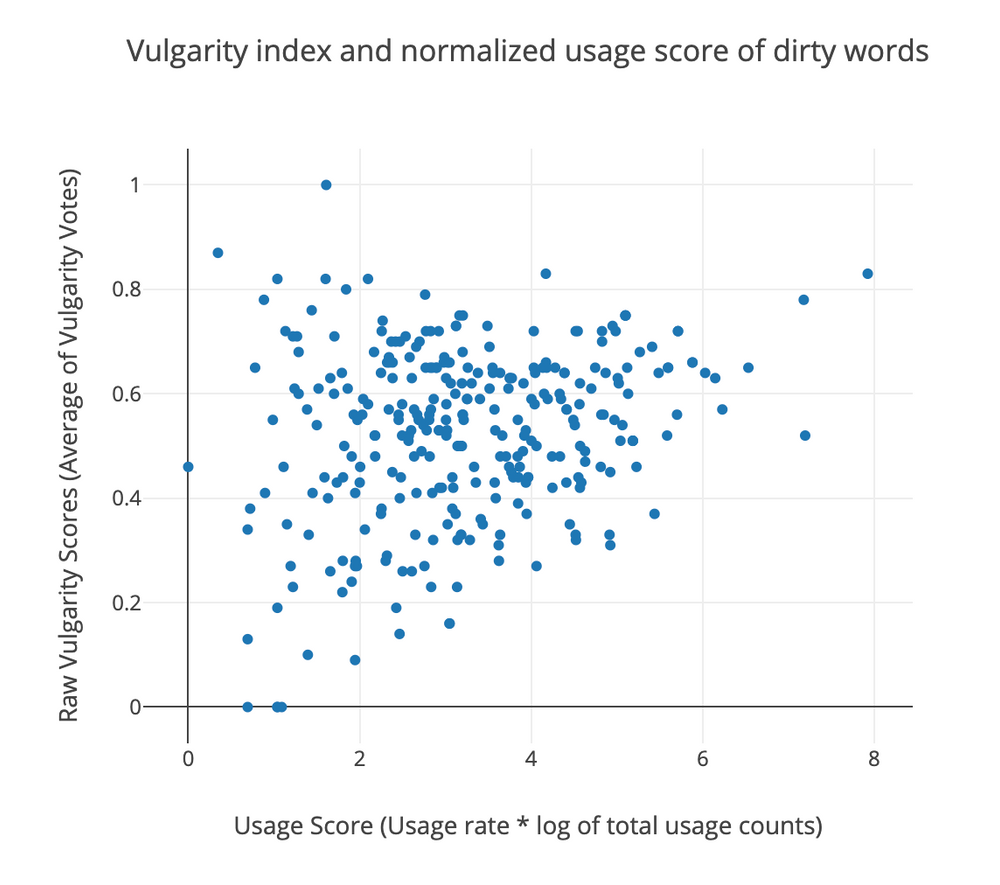

After cleaning up the list and removing derivatives of frequent swearwords (e.g. if fuck and fucking were both in the dictionary, the latter entry was removed), there were 303 vulgar expressions left. The expressions in the final list are plotted in the figure below.

The plot shows the raw vulgarity index on the y axis and, on the x axis, the proportion of voters who said they knew the vulgar meaning. In an ideal world, we could stop the preprocessing here and work only with the terms that appear in the upper right corner of the plot (i.e. those which are known by most people and got a reasonably high vulgarity score).

The reality, however, is not that simple. Since the plot above only shows averages and proportions, it doesn't give us any information about how many people voted for each given term. Actually, the word with the best scores (in the upper right-hand corner of the plot) is wankered, which only got one vote.

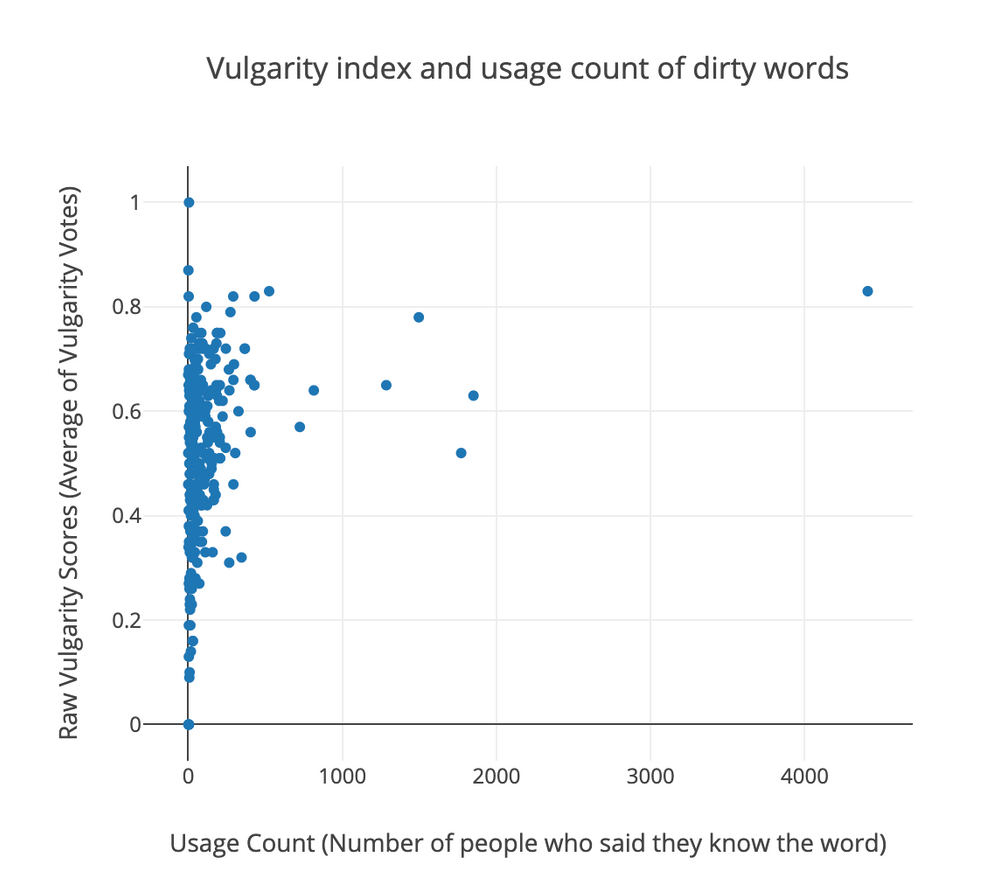

Let's see what we can do to fix this. Start by inspecting the raw counts of how many people said they knew a given vulgar word. In the figure below, the "known" vote counts are plotted on the x axis and the vulgar scores are on the y axis.

The results are rather skewed, which shouldn't come as a surprise: count data usually has a few extremely high values while most of the data points cluster around the lower values. To correct for this, I normalized the usage counts by multiplying the "known" rate (the proportion of voters who said that they knew the term) by the natural log of the counts.

Even in the normalized plot, there is a clear trend in the data set: the words that got lower usage scores (i.e. fewer people voted for them) have more varied vulgarity scores. From a practical point of view, it means that the words which get a higher number of votes are more likely to be close to the true average of the population, and are thus more reliable.

3.3 Testing the baseline model

So far so good. If we weight the vulgarity scores by the usage measures and the number of votes, we'll end up with a relatively reliable baseline model. At least in theory. There is nothing left but to test the theory.

For my baseline model, I used the following formula to calculate the final vulgarity score of individual words:

(1) ``Vulg_Score = Vulg_Ave * Known_Rate * log(Total_votes)``

where Vulg_Ave is the average of vulgarity votes on The Online Slang Dictionary, the Known_Rate is the ratio of people who voted they knew the given term from all the people who voted, and the Total_votes are the total number of votes given for a word in the usage category on The Online Slang Dictionary.
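As a small worked sketch, formula (1) can be written as a Python function. The function name, the argument names, and the vote numbers in the usage lines are my own illustrative assumptions; only the formula itself and the use of the natural log come from the text above.

```python
import math


def vulgarity_score(vulg_ave, known_votes, total_votes):
    """Formula (1): Vulg_Ave * Known_Rate * ln(Total_votes)."""
    if total_votes <= 0:
        raise ValueError("total_votes must be positive")
    # Known_Rate: share of voters who said they knew the term.
    known_rate = known_votes / total_votes
    return vulg_ave * known_rate * math.log(total_votes)


# Hypothetical numbers, chosen only to illustrate the effect of the log term.
one_vote_wonder = vulgarity_score(80.0, known_votes=1, total_votes=1)
well_known_term = vulgarity_score(80.0, known_votes=900, total_votes=1000)
print(one_vote_wonder, well_known_term)
```

Because ln(1) is 0, a term with a single vote gets a score of 0 no matter how vulgar that one voter found it, which is exactly the kind of entry the weighting is meant to push down.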

To test the baseline model, we downloaded 400 random comments from YouTube news videos. (The comments were collected at the end of September 2016.) First, the application split the raw texts into words and frequent collocations (i.e. tokens), and then it looked for any occurrences of the words or expressions in the dictionary. At this point, the biggest challenge was to make the system recognize vulgar words in all possible forms in the text, regardless of the word's grammatical form, whether it was misspelled, or whether it appeared in a unique compound (e.g. fuck and shit are extremely productive: they can be attached to almost anything).

After identifying all the vulgar terms in the comments, I calculated the vulgarity score of the individual words based on the formula above. If there were multiple dirty words in a comment, the vulgarity scores were added up. The results are shown in the figure below. Despite the varying y values, this figure is a one-dimensional plot, which shows the vulgarity scores for the individual comments on the x axis. The y values are only random noise, which was introduced in order to make the plot more readable.
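The matching and summing steps can be sketched roughly as follows. This is not the application's actual matcher: the `SCORES` dictionary holds made-up values, and the regular-expression approach is a simplifying assumption that catches inflected forms and compounds but does nothing about misspellings.

```python
import re

# Hypothetical per-term scores; real values would come from formula (1).
SCORES = {"crap": 120.0, "damn": 95.0, "pain in the ass": 150.0}


def find_vulgar_terms(text, scores):
    """Return every dictionary term found in `text`, once per occurrence."""
    lowered = text.lower()
    hits = []
    for term in scores:
        if " " in term:
            # Multi-word expression: match it as a whole phrase.
            pattern = r"\b" + re.escape(term) + r"\b"
        else:
            # Single word: allow extra letters on either side so that
            # inflected forms and compounds are caught as well.
            pattern = r"\b\w*" + re.escape(term) + r"\w*\b"
        hits.extend(term for _ in re.finditer(pattern, lowered))
    return hits


def comment_score(text, scores):
    """Add up the scores of all vulgar terms found in one comment."""
    return sum(scores[term] for term in find_vulgar_terms(text, scores))


print(comment_score("Damn, what a crappy crapfest.", SCORES))
print(comment_score("Nice weather today.", SCORES))
```

The price of such loose matching is false positives: with this sketch, scrap would be flagged because it contains crap. It also double-counts when one dictionary entry is contained in another, so a real system needs a whitelist or a smarter matcher.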

Most of the comments (77%) were predicted to be non-vulgar according to the baseline model. The biggest advantage of the model is that it is able to measure the degree of vulgarity and not just whether a text is vulgar or not. The main purpose of the model, however, is to mimic human behavior, i.e. to predict whether most people would consider a given text to be vulgar or not. Thus, it is essential to compare the performance of the model to people's judgment.

In a little experiment that we conducted, the same 400 YouTube comments were annotated by two people, who had to decide whether a comment was "Not Vulgar (0)", "Moderately Vulgar (1)", or "Vulgar (2)". The scores given by the two annotators were quite close to each other: they agreed 93% of the time, and the inter-rater reliability was also high (Cohen's kappa = 0.8). For a unified measure, the annotators' scores were averaged, but the comments that got a "Not Vulgar" label from one annotator and "Vulgar" from the other were removed from the corpus (there were three such comments).
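For readers who want to reproduce this kind of check, here is a minimal sketch of the agreement measures and the merging step. It assumes the plain, unweighted version of Cohen's kappa (the post does not say whether a weighted variant was used), and the two label lists are invented toy data, not our annotations.

```python
from collections import Counter


def percent_agreement(a, b):
    """Share of items on which the two annotators gave the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Unweighted Cohen's kappa for two equally long label sequences."""
    n = len(a)
    observed = percent_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    # Agreement expected by chance, from each annotator's label frequencies.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)


def merge_labels(a, b):
    """Average the two labels per comment, dropping 0-vs-2 disagreements."""
    return [(x + y) / 2 for x, y in zip(a, b) if abs(x - y) < 2]


# Toy labels for eight comments (0 = not vulgar, 1 = moderate, 2 = vulgar).
annotator_1 = [0, 0, 0, 2, 2, 1, 0, 2]
annotator_2 = [0, 0, 0, 2, 2, 2, 0, 0]

print(percent_agreement(annotator_1, annotator_2))
print(cohens_kappa(annotator_1, annotator_2))
print(merge_labels(annotator_1, annotator_2))
```

Kappa comes out lower than raw agreement because it discounts the matches two annotators would produce by chance, which matters when most comments fall into a single category. Note that the sketch does not guard against the degenerate case where chance agreement is exactly 1.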

To test how well the baseline model was able to predict the labels given by the annotators, I fitted a logistic regression model with scikit-learn. The predictor was the vulgarity score given by the baseline model, and the outcome was the annotators' judgment. The vulgarity scores of the model were used as continuous variables, while the annotator labels were treated as ordinal data.

Here are the most important parameters of the logistic regression model:

LogisticRegression(C=100000.0, class_weight=None, dual=False, fit_intercept=True, intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1, penalty='l2', random_state=None, solver='liblinear', tol=0.0001, verbose=0, warm_start=False)

Intercept: [ 4.54397979 -2.83450681 -4.38925666]

Coefficients: [-1.9811506478389251, 0.040611778006007772, 1.0461458566513961]

The accuracy and the F1 score of the logistic regression were 0.91 and 0.89 respectively, which means that the statistical model could predict the annotators' labels quite well from the vulgarity scores given by the baseline model. So the humans and the machine mainly agreed on which texts were vulgar and which were not.
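To make the two metrics concrete, here is a small sketch of how accuracy and an F1 score can be computed from true and predicted labels. The post does not say which F1 averaging was used for the three classes, so macro averaging is an assumption here, and the label lists are toy data rather than our results.

```python
def accuracy(y_true, y_pred):
    """Share of comments whose predicted label matches the annotators'."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores (macro averaging)."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    f1_values = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        # A class that is never predicted correctly contributes 0.
        f1_values.append(2 * tp / denom if denom else 0.0)
    return sum(f1_values) / len(f1_values)


# Toy labels: the single "moderately vulgar" comment is misclassified.
y_true = [0, 0, 0, 0, 1, 2, 2, 2]
y_pred = [0, 0, 0, 0, 0, 2, 2, 0]

print(accuracy(y_true, y_pred))
print(macro_f1(y_true, y_pred))
```

In the toy data the rare middle class is never predicted correctly, which drags macro F1 well below accuracy. That is worth keeping in mind when one category has very few examples.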

However, the statistical test also showed that the annotators and the baseline model mainly agreed on the extreme cases: whether a given text was not vulgar (0) or vulgar (2). It is also evident from the last graph that only a few data points fell in the "Moderately Vulgar" category according to the annotators. I don't want to go into the details of why vulgarity seems to be a binary "yes-or-no" category for our annotators, but it is a very interesting difference between the human and the computer measures, which may be worth looking into. The difference might just be due to the small size of the data set, but it may also be that it shows something very interesting about human perception or categorization.

4. Endnote

In this blog post, I tried to show how one might begin approaching the problem of designing a computer application which could automatically identify vulgar text. During this venture, I created an improved list of vulgar words by cross-referencing some online collections and extracting information about the words' degree of vulgarity and how they are used. I called this improved list the baseline model, the performance of which was tested on 400 random, human-annotated YouTube comments. The results showed that the machine was quite good at picking out vulgar comments.

However, there is plenty of room for improvement. For example, the information about the usage and the degree of vulgarity could be refined with experiments (for this blog post, I only used the data available on The Online Slang Dictionary). And we shouldn't forget that the baseline model, which reflects the average "typical" cases, might not be good enough for many applications. To overcome this limitation, the model should be customizable to individual needs. But that's a topic for another blog post, so stay tuned.
