Belfry.io - Building a training corpus of comments

Machine learning is a powerful method for solving various natural language processing tasks, but collecting the training corpus can be a difficult job. If we are to build a model that is not specific to a small subculture (like one subreddit), then we have to make sure our corpus contains samples from as many sources as possible. Every subculture, topic, and even platform has its own slang and its own way of using the language.

Another problem is labeling the training input. The most precise, but also the most time-consuming, method is manual labeling. A corpus labeled entirely by a human workforce is called a gold standard corpus.

Another way is to generate the labels with some automatic method. The result is called a silver standard corpus. Silver standard corpora may not be as precise as gold standard ones, but they can be far bigger. There is a tradeoff between the two approaches, and one needs to understand the specific task to choose one over the other, or to try a mixture of the two. Generally speaking, if a label covers heterogeneous samples (e.g. a broad topic, like all comments about Latino people, whether they concern cooking or immigration policy), a successful model needs a lot of training data. Since it's often not possible to build a big enough gold standard corpus for that, the gold standard corpus can only play a supporting role next to the bigger silver standard corpus (e.g. for validation).
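As a minimal sketch of that mixture, the snippet below derives silver labels from the source a comment was collected from and then checks them against a small gold standard sample. The source names, the `SOURCE_LABELS` mapping, and the comment records are all hypothetical, made up for illustration; a real project would plug in its own sources and labels.

```python
# Hypothetical mapping from a comment's source to the label we assume for it.
SOURCE_LABELS = {
    "cooking-forum": "cooking",
    "politics-forum": "politics",
}


def silver_label(comments):
    """Attach the label implied by each comment's source (a silver label).

    Comments from sources we have no assumption about are dropped.
    """
    return [
        {**c, "label": SOURCE_LABELS[c["source"]]}
        for c in comments
        if c["source"] in SOURCE_LABELS
    ]


def agreement_with_gold(silver, gold):
    """Fraction of gold-labeled comments whose silver label matches.

    `gold` maps comment id -> human-assigned label. Returns None when
    the two sets do not overlap.
    """
    checked = [c for c in silver if c["id"] in gold]
    if not checked:
        return None
    hits = sum(1 for c in checked if c["label"] == gold[c["id"]])
    return hits / len(checked)


# Made-up sample data: comment 2 is off-topic for its forum.
comments = [
    {"id": 1, "source": "cooking-forum", "text": "Add more garlic."},
    {"id": 2, "source": "cooking-forum", "text": "The new tax law is bad."},
    {"id": 3, "source": "politics-forum", "text": "Vote on Tuesday."},
    {"id": 4, "source": "unknown-blog", "text": "First!"},
]
gold = {1: "cooking", 2: "politics", 3: "politics"}

silver = silver_label(comments)
agreement = agreement_with_gold(silver, gold)
print(f"silver/gold agreement: {agreement:.2f}")
```

The agreement score only tells us how noisy the silver labels are on the sample that humans checked; it says nothing about sources that never made it into the gold sample.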

While collecting the documents that will make up the corpus, it's a good idea to go into it with some initial knowledge about what the documents' labels will be. This helps in labeling when making a gold standard corpus, and it's vital when building a silver standard one. Here are some ideas for collecting comments when we have a preference for their topic.

Looking up sites manually

We can manually look up sites, forums, etc. that are somehow related to the topic we are looking for, and assume that an above-average share of the comments there will belong to the given topic. The biggest drawback of this approach is that the list of sites crawled this way can be heavily biased by the people who made it. It's also a time-consuming method, since a lot of these sites or forums have a custom comment engine, and one must write a different crawler for each one.

Sites labeled by others

The DMOZ database (dmoz.org) contains websites labeled by topic by human editors. A lot of these sites allow comments. However, it is a moderated list, so certain inappropriate sites will not make it onto the list. If one is looking for these kinds of comments, then DMOZ may not be the best way to collect them. There's also the problem of crawling from different comment engines, as mentioned above.

YouTube

It's possible to use YouTube for collecting comments by their (assumed) topic, since YouTube's algorithms determine the topic of all uploaded videos from their keywords and description. When the amount of quality videos in a given topic exceeds a certain threshold, YouTube automatically creates a channel and tries to create playlists by subcategories. This makes it easy to collect a huge amount of YouTube comments. One drawback is that YouTube is just one platform, with its own culture and slang.

Reddit

Using subreddits, it's easy to get a huge amount of well-fitting comments for a label. One can look up subreddits with Reddit's search feature, or use the list of subreddits by topic. Reddit has a good API too, making it easy to crawl the comments. However, Reddit's terms of service are stricter than YouTube's, so it's important to read them carefully.
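Once a comment thread has been fetched, the replies have to be flattened into individual training samples. The following is a minimal sketch of that step only. It assumes the thread arrives as nested dictionaries with `kind`, `data`, `children`, `body`, and `replies` fields, and that comments carry the kind tag `t1`; these field names are assumptions that should be checked against Reddit's API documentation. The sample thread is made up, and no network call is made.

```python
def iter_comment_bodies(listing):
    """Yield the text of every comment in a nested listing, depth first."""
    for child in listing.get("data", {}).get("children", []):
        if child.get("kind") != "t1":  # assumed kind tag for comments
            continue
        data = child["data"]
        yield data["body"]
        replies = data.get("replies")
        # Assumption: a comment without replies carries an empty string here.
        if isinstance(replies, dict):
            yield from iter_comment_bodies(replies)


def make_comment(body, replies=""):
    """Build one made-up comment node in the assumed shape."""
    return {"kind": "t1", "data": {"body": body, "replies": replies}}


def make_listing(children):
    """Wrap nodes in the assumed listing envelope."""
    return {"kind": "Listing", "data": {"children": children}}


# A made-up thread: one top-level comment with a reply, one without,
# and a non-comment placeholder node that should be skipped.
sample_thread = make_listing([
    make_comment("Great recipe!", make_listing([
        make_comment("Agreed, I added more chili."),
    ])),
    make_comment("Too salty for me."),
    {"kind": "more", "data": {"children": []}},
])

bodies = list(iter_comment_bodies(sample_thread))
print(bodies)
```

Keeping the flattening separate from the fetching makes it possible to test the parsing offline, and to swap in another platform's comment format later.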

Commenters

If you are looking for non-English comments, then methods 2-4 (sites labeled by others, YouTube, and Reddit) are not really useful. In that case, the snowball method can help. Given a platform that is popular amongst the target user base (e.g. Disqus, YouTube, or any similar local platform), one can look up a few YouTube videos, Disqus sites, etc. in the given topic and check where else their commenters have commented. Adding these sites to the list and repeating the process can yield a lot of comments. However, the ratio of comments that actually belong to the desired label will be lower than with the other methods, and the method can't completely eliminate the bias that comes from manually choosing the initial forums.
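The snowball process described above can be sketched as a breadth-first expansion from a few seed sites. In this sketch `commenters_on` and `sites_of` are hypothetical callbacks standing in for whatever platform lookup is available, and the `COMMENTS` table is made-up data used in place of a real platform.

```python
from collections import deque


def snowball(seed_sites, commenters_on, sites_of, max_sites=100):
    """Expand a list of sites by following commenters to other sites.

    commenters_on(site) -> iterable of user names (hypothetical lookup)
    sites_of(user)      -> iterable of sites the user commented on
    Returns the sites in the order they were discovered, seeds first.
    """
    found = list(dict.fromkeys(seed_sites))  # de-duplicate, keep order
    seen = set(found)
    queue = deque(found)
    while queue and len(found) < max_sites:
        site = queue.popleft()
        for user in commenters_on(site):
            for other in sites_of(user):
                if other not in seen and len(found) < max_sites:
                    seen.add(other)
                    found.append(other)
                    queue.append(other)
    return found


# Made-up data: which users commented on which site.
COMMENTS = {
    "site-a": ["ann", "bob"],
    "site-b": ["bob", "cid"],
    "site-c": ["cid"],
    "site-d": ["dan"],
}


def commenters_on(site):
    return COMMENTS.get(site, [])


def sites_of(user):
    return [site for site, users in COMMENTS.items() if user in users]


print(snowball(["site-a"], commenters_on, sites_of))
```

The `max_sites` cap is a design choice rather than part of the method: without some limit, the expansion drifts further from the seed topic with every round, which is one reason the share of on-topic comments drops.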

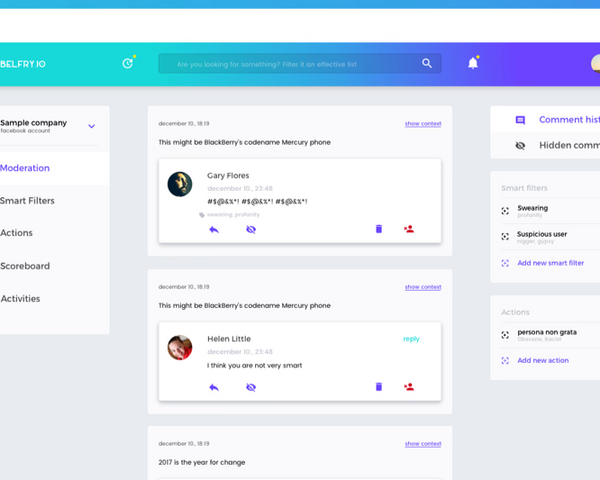

It's important to mention that moderation can distort the results of all the methods discussed above. If you are developing models that are affected by this phenomenon (as we are at belfry.io), you have to take great care to limit its impact on the models.
